Designing a Data Source Discovery App - Part 22: The Power of Question Redirects

by DL Keeshin

June 2, 2025

In my last post, I covered how the kDS Discovery app handles database security with multiple layers of protection. Today I want to discuss one of the most innovative features we've built into the system: question redirects. This seemingly simple feature addresses one of the biggest challenges in data discovery interviews and represents a fundamental shift in how we think about collaborative knowledge gathering.

The Challenge: When "I Don't Know" Becomes a Dead End

Anyone who has conducted data source discovery interviews knows the frustration. You're halfway through a comprehensive questionnaire about data flows, security protocols, or compliance requirements when your respondent hits a wall:

"I'm not sure about our data retention policies..."

"You'd need to ask our security team about encryption standards..."

"That information would be with our compliance officer..."

In traditional surveys, this is where progress stops. The interview stalls, follow-up emails get lost in busy inboxes, and critical data discovery efforts lose momentum. Weeks can pass before the right person is identified and contacted, if they ever are.

The Solution: Intelligent Question Redirects

The kDS Discovery app transforms this roadblock into a seamless workflow with its question redirect feature. Here's how it works:

1. Frictionless Redirect Activation

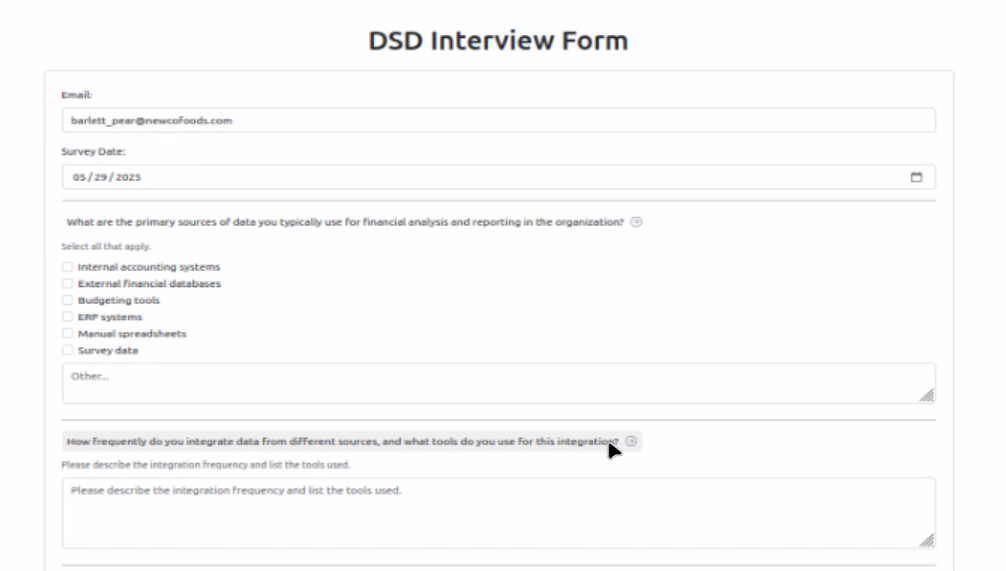

When a respondent encounters a question they can't answer, they simply click on the question header. This triggers an intuitive modal dialog that doesn't disrupt their flow through the rest of the survey.

The interview form with clickable question headers indicated by arrow icons

```html
<div class="question-header" data-bs-toggle="modal" data-bs-target="#redirectModal"
     data-question-id="{{ question_id }}" data-question-text="{{ question }}">
    <label>{{ question }}</label>
    <i class="bi bi-arrow-right-circle"></i>
</div>
```

2. Structured Information Capture

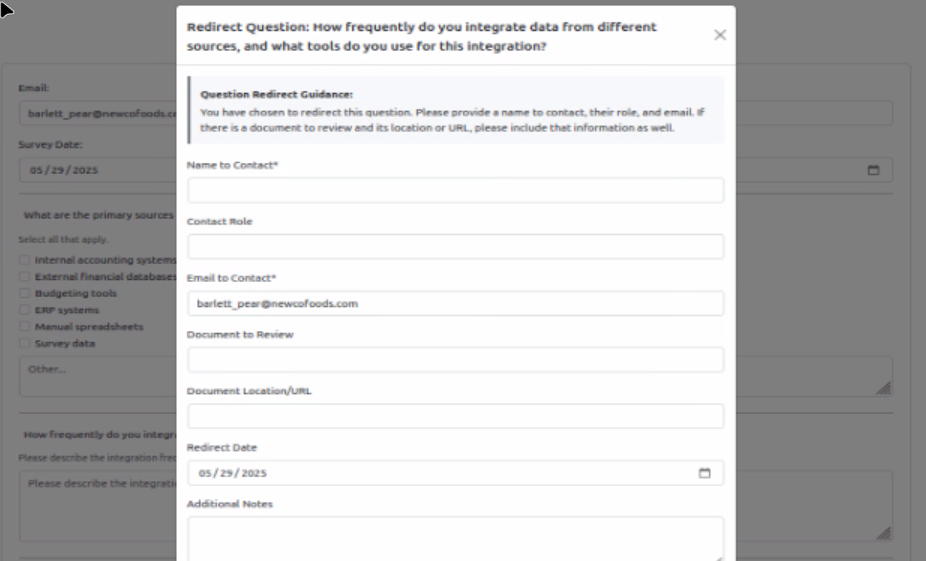

The redirect modal captures essential details in a structured format:

The question redirect modal captures structured information about who to contact

- Name to Contact: Who has the information?

- Contact Role: What's their position/responsibility?

- Email to Contact: Direct communication channel

- Document to Review: Any relevant documentation

- Document Location/URL: Where to find supporting materials

- Additional Notes: Context and specifics
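To make that structure concrete, here is a minimal sketch of how one redirect record could be modeled and sanity-checked before it is saved. The `QuestionRedirect` class is hypothetical: its field names mirror the columns in the INSERT statement shown in the implementation section, and the validation rules (a required contact name, a loose email shape check, no document location without a document name) are illustrative assumptions, since the post does not say which fields the app actually requires.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QuestionRedirect:
    """Hypothetical model of one redirect captured by the modal."""
    question_id: int
    redirect_date: date
    name_to_contact: str
    contact_role: str = ""
    email_to_contact: str = ""
    document_to_review: str = ""
    document_location: str = ""
    note: str = ""

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the record looks usable."""
        problems = []
        if not self.name_to_contact.strip():
            problems.append("name_to_contact is required")
        # Loose shape check only: non-empty local part, '@', and a dotted domain
        email = self.email_to_contact.strip()
        if email:
            local, _, domain = email.partition("@")
            if not local or "." not in domain:
                problems.append("email_to_contact does not look like an email address")
        # A location with no document name is probably an incomplete entry
        if self.document_location and not self.document_to_review:
            problems.append("document_location given without document_to_review")
        return problems


# Usage example with made-up values
redirect = QuestionRedirect(
    question_id=42,
    redirect_date=date(2025, 6, 2),
    name_to_contact="Jane Doe",
    contact_role="CISO",
    email_to_contact="jane.doe@example.com",
    document_to_review="Data Security Policy",
)
print(redirect.validate())  # []
```

Returning a list of problems instead of raising on the first one lets a form show every issue at once.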

3. Seamless Integration

The redirect information is saved to the database with full traceability, linking back to the specific question and interview context. The respondent can then continue with the rest of the survey without losing momentum.

Why This Approach Is Transformative

Maintains Survey Momentum

Rather than abandoning the interview when they hit an unknown, respondents can quickly redirect the question and continue. This keeps completion rates up and shortens the time between survey initiation and final results.

Creates Actionable Follow-Up Workflows

Each redirect produces a structured record that can drive automated follow-up emails, task lists, tracking of outstanding requests, and reports on knowledge gaps.
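As an illustration of that follow-up workflow, the sketch below groups redirect records by contact email and drafts one message per person with Python's standard `email.message.EmailMessage`. The record shape (plain dictionaries using the modal's field names plus a hypothetical `question_text` key), the `draft_follow_ups` name, the sender address, and the wording are all assumptions; actually sending the messages (for example with `smtplib`) is deliberately left out.

```python
from collections import defaultdict
from email.message import EmailMessage


def draft_follow_ups(redirects, sender="discovery@example.com"):
    """Group redirect records by contact email and draft one message per contact.

    Each record is assumed to be a dict using the redirect modal's field names
    plus a hypothetical "question_text" key. Nothing is sent here.
    """
    by_contact = defaultdict(list)
    for r in redirects:
        # Normalize case so one person is not emailed twice (a simplification)
        email = (r.get("email_to_contact") or "").strip().lower()
        if email:  # records without an email need manual follow-up instead
            by_contact[email].append(r)

    messages = []
    for email, items in sorted(by_contact.items()):
        name = items[0].get("name_to_contact") or "there"
        lines = [f"Hello {name},", "",
                 "A colleague indicated you can help answer these discovery questions:", ""]
        for r in items:
            lines.append(f"- {r['question_text']}")
            if r.get("document_to_review"):
                lines.append(f"  (see: {r['document_to_review']})")
            if r.get("note"):
                lines.append(f"  Note: {r['note']}")

        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = email
        msg["Subject"] = f"Data discovery: {len(items)} question(s) for you"
        msg.set_content("\n".join(lines))
        messages.append(msg)
    return messages


# Usage example with made-up records
redirects = [
    {"question_text": "Which encryption standard protects data at rest?",
     "name_to_contact": "Jane Doe", "email_to_contact": "Jane.Doe@example.com",
     "document_to_review": "Data Security Policy"},
    {"question_text": "How often are encryption keys rotated?",
     "name_to_contact": "Jane Doe", "email_to_contact": "jane.doe@example.com"},
]
for m in draft_follow_ups(redirects):
    print(m["To"], "|", m["Subject"])
# jane.doe@example.com | Data discovery: 2 question(s) for you
```

Batching by contact means an expert who owns several answers gets one request instead of a stream of separate emails.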

Preserves Institutional Knowledge

The redirect system captures not just who to contact, but also their role and relevant documentation. This creates a valuable knowledge map of who owns what information across the organization.
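One way to turn redirect records into such a knowledge map is to index them by contact role, collecting who was named and which documents were cited, while flagging redirected questions that cite no document at all as likely documentation gaps. The `build_knowledge_map` function, the dictionary record shape, and the "no document means a gap" rule are illustrative assumptions, not features the post describes.

```python
from collections import defaultdict


def build_knowledge_map(redirects):
    """Index redirect records by contact role and flag undocumented questions.

    Returns (knowledge_map, undocumented): knowledge_map maps each role to the
    contacts, documents, and question ids associated with it; undocumented lists
    question ids that were redirected without any supporting document.
    """
    knowledge_map = defaultdict(
        lambda: {"contacts": set(), "documents": set(), "questions": []})
    undocumented = []
    for r in redirects:
        role = (r.get("contact_role") or "").strip() or "Unspecified role"
        entry = knowledge_map[role]
        if r.get("name_to_contact"):
            entry["contacts"].add(r["name_to_contact"])
        if r.get("document_to_review"):
            entry["documents"].add(r["document_to_review"])
        else:
            undocumented.append(r["question_id"])
        entry["questions"].append(r["question_id"])
    return dict(knowledge_map), undocumented


# Usage example with made-up records
redirects = [
    {"question_id": 12, "name_to_contact": "Jane Doe", "contact_role": "CISO",
     "document_to_review": "Data Security Policy"},
    {"question_id": 13, "name_to_contact": "Jane Doe", "contact_role": "CISO"},
    {"question_id": 20, "name_to_contact": "Sam Lee",
     "contact_role": "Compliance Officer",
     "document_to_review": "Retention Schedule"},
]
kmap, gaps = build_knowledge_map(redirects)
print(sorted(kmap["CISO"]["contacts"]))  # ['Jane Doe']
print(gaps)                              # [13]
```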

Enables Collaborative Data Discovery

Data discovery becomes a collaborative effort rather than a series of individual interviews. Subject matter experts can be brought in precisely where their expertise is needed.

The Technical Implementation

The implementation is simple but effective. The backend save_redirect() function, abbreviated below, captures the submitted fields and maintains referential integrity with the original interview context:

```python
def save_redirect(request):
    """Save question redirect information and redirect to appropriate page"""
    # Extract form data
    question_id = request.form.get('question_id')
    redirect_date_str = request.form.get('redirect_date')
    name_to_contact = request.form.get('name_to_contact')
    # ... additional fields

    # (Omitted from this excerpt: parsing redirect_date_str into redirect_date
    # and opening the database cursor `cur`.)

    # Insert the redirect record with full traceability
    cur.execute("""
        INSERT INTO interview.question_redirect
            (question_id, redirect_date, name_to_contact, contact_role,
             email_to_contact, document_to_review, document_location, note)
        VALUES (%s, %s, %s, %s, %s, %s, %s, %s)
        RETURNING redirect_id
    """, (question_id, redirect_date, name_to_contact, ...))

    # ... commit, then redirect to the appropriate page (omitted)
```

Real-World Impact

Case Study: Financial Services Company

Traditional Approach: A data analyst interviewing an application owner gets stuck on questions about data encryption standards. The interview ends incomplete, requiring multiple follow-up meetings with security personnel, often weeks later.

With Question Redirects: The application owner quickly redirects the encryption questions to the CISO, provides the CISO's contact details, and references the company's data security policy document. They then complete the remaining 80% of the survey immediately. The data governance team has a clear action item to follow up with the CISO on the specific questions.

The Broader Vision

Question redirects represent more than just a survey feature – they embody a fundamental shift in how we think about organizational knowledge discovery. Instead of viewing surveys as isolated data collection exercises, we can see them as collaborative knowledge-mapping activities that:

- Surface expertise networks within the organization

- Identify knowledge gaps and documentation needs

- Create efficient pathways to the right information

- Build reusable contact networks for future discovery efforts

Looking Forward

As organizations become increasingly complex and data-driven, the ability to efficiently navigate knowledge networks becomes critical. The question redirect feature in kDS Discovery points toward a future where data governance tools don't just collect information – they actively help organizations understand and optimize how knowledge flows through their systems.

The next time you're designing a survey or interview process, consider this: What if "I don't know" wasn't the end of the conversation, but the beginning of a more intelligent one?

Join Our Beta Program

As we continue developing the kDS Data Source Discovery App, including collaborative features like question redirects, we're actively seeking organizations to participate in our beta program. If your organization could benefit from streamlined data source discovery with robust security controls, we'd love to collaborate with you.

Interested? Leave a comment below or reach out directly at info@keeshinds.com.

Thank you for stopping by.