Introduction

This post is a follow-up to my previous one, where I explored building a Data Discovery Platform as a Service (PaaS) to streamline and enhance the data discovery process. In today's data-centric world, organizations are continually searching for ways to unlock the full potential of their data. Data discovery, the process of finding, collecting, and analyzing data from various sources to uncover valuable insights, is fundamental to this goal. However, traditional data discovery processes are often cumbersome and inefficient, involving manual surveys, disparate data collection methods, and time-consuming interviews. Much of this work is still tracked in spreadsheets, which leads to fragmented and error-prone data management. These methods slow down the discovery process and can produce incomplete or inaccurate insights.

To address these challenges, we introduce a Chatbot Data Discovery Interview App. The application uses conversational AI to automate discovery interviews. Because a chatbot asks every contact a consistent set of questions, organizations can gather structured data more efficiently, improve its accuracy, and reduce the time required for data collection. This post covers the database design that supports the app, using a wholesale distribution business as an example to illustrate the workflow and database schema.

Workflow Description

- Generating Model Interview Questions

The initial step involves generating model interview questions tailored to specific roles within various industries and business units. For example, a sales manager in a wholesale distribution business might be asked questions like:

- "What are the primary sources of data you use for your daily tasks (e.g., CRM systems, sales reports, customer feedback)?"

- "What specific tools or software do you use to acquire sales data (e.g., Salesforce, Excel, Power BI)?"

- "How do you collect customer interaction and sales performance data?"

- "Do you use any third-party data sources? If so, which ones?"

- "Are there any internal systems you rely on for data collection and analysis?"

- "How frequently do you access sales data (e.g., daily, weekly, monthly)?"
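One way to represent these model questions in application code is as a small record type that scopes each question to an industry, business unit, and role. The following is a minimal sketch. It assumes NAICS sector code `42` (Wholesale Trade) for the industry and a business unit named `Sales`. The `ModelQuestion` class and `build_model_questions` function are hypothetical names, not the app's actual code. Each question gets a UUID, consistent with the UUID keys mentioned in the conclusion.

```python
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelQuestion:
    """A model interview question scoped to an industry, business unit, and role.

    Hypothetical structure for illustration; the field names are assumptions.
    """
    industry_code: str  # NAICS code; "42" is the Wholesale Trade sector
    business_unit: str
    role: str
    text: str
    id: uuid.UUID = field(default_factory=uuid.uuid4)  # globally unique key


def build_model_questions(industry_code, business_unit, role, texts):
    """Create one ModelQuestion per question text, all sharing the same scope."""
    return [ModelQuestion(industry_code, business_unit, role, t) for t in texts]


SALES_MANAGER_TEXTS = [
    "What are the primary sources of data you use for your daily tasks?",
    "What specific tools or software do you use to acquire sales data?",
    "How do you collect customer interaction and sales performance data?",
    "Do you use any third-party data sources? If so, which ones?",
    "Are there any internal systems you rely on for data collection and analysis?",
    "How frequently do you access sales data?",
]

questions = build_model_questions("42", "Sales", "Sales Manager", SALES_MANAGER_TEXTS)

for q in questions:
    print(f"[{q.role} / {q.business_unit} / NAICS {q.industry_code}] {q.text}")
```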

- Gathering Client Data

Once the model questions are generated, the next step is to gather data about the client, specifically each contact's industry, business unit, and role. This lets the app match each client contact to the corresponding model questions, which makes the analysis results more accurate.
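The matching step can be sketched as a lookup over the available model interviews. This is a minimal sketch that rests on a few assumptions. The `ModelInterview` and `ClientContact` types are hypothetical. Business unit and role labels are compared case-insensitively. NAICS codes are hierarchical, with longer codes refining shorter ones, so a client's code is matched to the longest model code that is a prefix of it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelInterview:
    """Hypothetical model interview scope (industry, business unit, role)."""
    name: str
    industry_code: str  # NAICS prefix, e.g. "42" for Wholesale Trade
    business_unit: str
    role: str


@dataclass(frozen=True)
class ClientContact:
    """Hypothetical client contact gathered before the interview."""
    name: str
    industry_code: str  # the client's own, possibly more specific, NAICS code
    business_unit: str
    role: str


def _norm(value: str) -> str:
    """Case- and whitespace-insensitive form used for comparing labels."""
    return " ".join(value.lower().split())


def match_model(contact: ClientContact, models: list[ModelInterview]) -> Optional[ModelInterview]:
    """Pick the most specific model whose NAICS code is a prefix of the contact's
    code and whose business unit and role match the contact's."""
    candidates = [
        m
        for m in models
        if contact.industry_code.startswith(m.industry_code)
        and _norm(m.business_unit) == _norm(contact.business_unit)
        and _norm(m.role) == _norm(contact.role)
    ]
    # A longer NAICS prefix means a more specific industry match.
    return max(candidates, key=lambda m: len(m.industry_code), default=None)


models = [
    ModelInterview("Sales Manager (sector 42)", "42", "Sales", "Sales Manager"),
    ModelInterview("Sales Manager (subsector 423)", "423", "Sales", "Sales Manager"),
    ModelInterview("Buyer (sector 42)", "42", "Purchasing", "Buyer"),
]

contact = ClientContact("Pat", "423430", "sales", "Sales  Manager")
print(match_model(contact, models))
```

When no model fits, the function returns `None`. The app could then flag the contact rather than ask mismatched questions.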

- Conducting the Interview

The chatbot will conduct interviews with client contacts, asking the generated data discovery questions. The responses will be stored in a PostgreSQL database.
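The interview step can be sketched as a loop that does not depend on where the answers end up. This is a minimal illustration with hypothetical names. `run_interview` takes an `ask` callback that stands in for the chatbot and a `store` callback that stands in for the PostgreSQL insert. Both are stubbed with plain Python here so the example runs on its own.

```python
from typing import Callable, Iterable, Optional


def run_interview(
    questions: Iterable[str],
    ask: Callable[[str], Optional[str]],
    store: Callable[[str, str], None],
) -> int:
    """Ask each question in order and pass every (question, response) pair to `store`.

    `ask` returns None when the respondent ends the session early, which stops
    the loop. Returns the number of questions answered.
    """
    answered = 0
    for question in questions:
        response = ask(question)
        if response is None:
            break
        store(question, response)
        answered += 1
    return answered


scripted = {
    "What specific tools or software do you use to acquire sales data?": "Salesforce and Excel",
    "How frequently do you access sales data?": "Daily",
}
# The third question has no scripted answer, so the stub "respondent" stops there.
interview_questions = list(scripted) + ["Do you use any third-party data sources? If so, which ones?"]

stored: list[tuple[str, str]] = []
count = run_interview(interview_questions, scripted.get, lambda q, r: stored.append((q, r)))
print(count, stored)
```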

- Data Analysis and Follow-Up

Once the data is collected, it will be analyzed to derive insights. Based on the analysis, follow-up interviews may be scheduled to gather more detailed information or clarify responses.

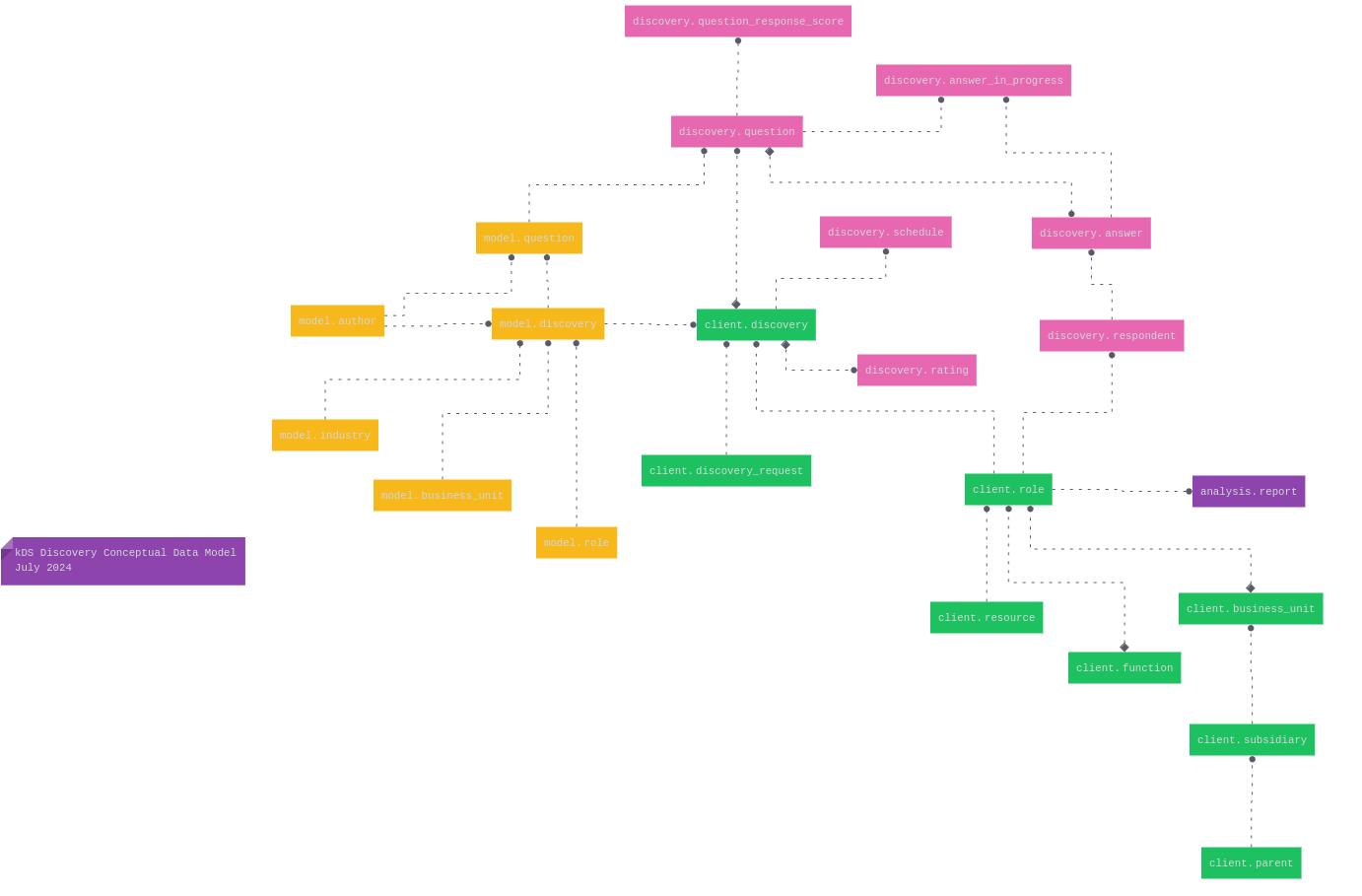
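Before any deeper analysis, simple rules can decide which responses warrant a follow-up interview. The following sketch is illustrative only. The `VAGUE_MARKERS` list and the rule of flagging blank or hedged answers are assumptions, not criteria the app defines.

```python
from typing import Optional

# Hypothetical phrases treated as signals that an answer needs clarification.
VAGUE_MARKERS = ("not sure", "don't know", "it depends")


def needs_follow_up(response: Optional[str]) -> bool:
    """Flag responses that are missing, blank, or contain a vague marker."""
    if response is None or not response.strip():
        return True
    text = response.lower()
    return any(marker in text for marker in VAGUE_MARKERS)


def follow_up_questions(answers: dict[str, Optional[str]]) -> list[str]:
    """Return the questions whose answers should be revisited in a follow-up interview."""
    return [question for question, response in answers.items() if needs_follow_up(response)]


answers = {
    "What specific tools or software do you use to acquire sales data?": "Salesforce and Excel",
    "Do you use any third-party data sources? If so, which ones?": "",
    "Are there any internal systems you rely on for data collection and analysis?": "Not sure, maybe the ERP",
    "How frequently do you access sales data?": "Daily",
}
print(follow_up_questions(answers))
```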

Database Design

The database design comprises several schemas and tables to organize and store the data effectively. Below is an overview of the key tables and their purposes, with references to the detailed schema definitions provided.

Schemas

- admin: For administrative data.

- analysis: For storing analysis results.

- client: For client-related data.

- discovery: For discovery process data.

- model: For storing model questions and related metadata.

- reference: For reference data.
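Creating these schemas in PostgreSQL is a one-time setup step. The following Python sketch generates a `CREATE SCHEMA IF NOT EXISTS` statement and a `COMMENT ON SCHEMA` statement for each of the six schemas. It only builds the SQL strings. Executing them through a PostgreSQL driver is left out. The short descriptions come from the list above.

```python
# Schema names from the list above; the descriptions mirror the text.
SCHEMAS = {
    "admin": "Administrative data",
    "analysis": "Analysis results",
    "client": "Client-related data",
    "discovery": "Discovery process data",
    "model": "Model questions and related metadata",
    "reference": "Reference data",
}


def schema_ddl(schemas: dict[str, str]) -> list[str]:
    """Build PostgreSQL statements that create each schema and document it."""
    statements = []
    for name, description in schemas.items():
        statements.append(f"CREATE SCHEMA IF NOT EXISTS {name};")
        escaped = description.replace("'", "''")  # SQL string-literal escaping
        statements.append(f"COMMENT ON SCHEMA {name} IS '{escaped}';")
    return statements


for statement in schema_ddl(SCHEMAS):
    print(statement)
```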

Tables and Their Purposes

Model Tables

- model.role: Stores roles within the model, such as "Sales Manager."

- model.business_unit: Stores information about different business units.

- model.industry: Stores industry information using NAICS (North American Industry Classification System) codes.

- model.author: Contains data about authors who create the questions.

- model.discovery: Links roles, business units, industries, and authors to specific interview models.

- model.question: Stores the actual interview questions and their metadata.
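The following is a minimal, runnable sketch of the model tables that makes their relationships concrete. It uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL, so it runs without a server. SQLite has no schemas, so `model.role` becomes `model_role`, and UUIDs are stored as text instead of in PostgreSQL's `uuid` type. All column names are assumptions for illustration, not the app's actual definitions.

```python
import sqlite3
import uuid

# Hypothetical columns; SQLite has no schemas, so "model.role" becomes "model_role".
MODEL_DDL = """
CREATE TABLE model_role (
    id   TEXT PRIMARY KEY,            -- UUID stored as text
    name TEXT NOT NULL UNIQUE         -- e.g. 'Sales Manager'
);
CREATE TABLE model_business_unit (
    id   TEXT PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE model_industry (
    id         TEXT PRIMARY KEY,
    naics_code TEXT NOT NULL UNIQUE,
    title      TEXT NOT NULL
);
CREATE TABLE model_author (
    id   TEXT PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE model_discovery (        -- one interview model per role/unit/industry
    id               TEXT PRIMARY KEY,
    role_id          TEXT NOT NULL REFERENCES model_role(id),
    business_unit_id TEXT NOT NULL REFERENCES model_business_unit(id),
    industry_id      TEXT NOT NULL REFERENCES model_industry(id),
    author_id        TEXT NOT NULL REFERENCES model_author(id),
    UNIQUE (role_id, business_unit_id, industry_id)
);
CREATE TABLE model_question (
    id            TEXT PRIMARY KEY,
    discovery_id  TEXT NOT NULL REFERENCES model_discovery(id),
    sort_order    INTEGER NOT NULL,
    question_text TEXT NOT NULL,
    UNIQUE (discovery_id, sort_order)
);
"""


def new_id() -> str:
    """Generate a globally unique key, stored as text in this SQLite sketch."""
    return str(uuid.uuid4())


conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(MODEL_DDL)

ids = {key: new_id() for key in ("role", "unit", "industry", "author", "discovery")}
conn.execute("INSERT INTO model_role VALUES (?, ?)", (ids["role"], "Sales Manager"))
conn.execute("INSERT INTO model_business_unit VALUES (?, ?)", (ids["unit"], "Sales"))
conn.execute("INSERT INTO model_industry VALUES (?, ?, ?)", (ids["industry"], "42", "Wholesale Trade"))
conn.execute("INSERT INTO model_author VALUES (?, ?)", (ids["author"], "Example Author"))
conn.execute(
    "INSERT INTO model_discovery VALUES (?, ?, ?, ?, ?)",
    (ids["discovery"], ids["role"], ids["unit"], ids["industry"], ids["author"]),
)
conn.execute(
    "INSERT INTO model_question VALUES (?, ?, ?, ?)",
    (new_id(), ids["discovery"], 1, "How frequently do you access sales data?"),
)
conn.commit()

# A question that points at a non-existent interview model is rejected.
try:
    conn.execute(
        "INSERT INTO model_question VALUES (?, ?, ?, ?)",
        (new_id(), "no-such-model", 2, "Orphan question"),
    )
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```

In PostgreSQL, the same foreign keys are enforced without any pragma, and the `id` columns can use the native `uuid` type.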

Client Tables

- client.role: Stores roles and associated information for clients.

- client.discovery: Contains client responses to interview questions and links to model discovery.
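The client tables tie each respondent back to the model. The following plain-Python sketch assumes hypothetical fields. A `ClientRole` record points at the `model.discovery` interview it was matched to. Each `ClientDiscovery` record stores one response together with the ID of the model question it answers, so responses can be joined back to the question text for analysis.

```python
import uuid
from dataclasses import dataclass, field


def _new_id() -> str:
    return str(uuid.uuid4())


@dataclass
class ClientRole:
    """A client contact's role, linked to the model interview it was matched to."""
    contact_name: str
    role: str
    model_discovery_id: str  # references model.discovery
    id: str = field(default_factory=_new_id)


@dataclass
class ClientDiscovery:
    """One response from a client contact to one model question."""
    client_role_id: str      # references client.role
    model_question_id: str   # references model.question
    response: str
    id: str = field(default_factory=_new_id)


def responses_with_questions(
    responses: list[ClientDiscovery], question_text: dict[str, str]
) -> list[tuple[str, str]]:
    """Pair each response with the text of the model question it answers."""
    return [(question_text[r.model_question_id], r.response) for r in responses]


# Hypothetical IDs for illustration.
question_text = {"q-frequency": "How frequently do you access sales data?"}
pat = ClientRole("Pat", "Sales Manager", model_discovery_id="d-sales-manager")
responses = [ClientDiscovery(pat.id, "q-frequency", "Weekly")]
print(responses_with_questions(responses, question_text))
```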

Discovery Tables

- discovery.schedule: Tracks interview schedules.

- discovery.rating: Stores ratings and conditions related to responses.

- discovery.question: Contains detailed information about each question, including type, tags, and metadata.

- discovery.question_response_score: Links questions to possible response scores.

- discovery.answer: Stores actual answers provided by respondents.

- discovery.answer_in_progress: Tracks answers in progress, including partial responses.
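Splitting `discovery.answer_in_progress` from `discovery.answer` suggests a two-step write. Partial text accumulates while the respondent is still answering, and the completed answer is then moved over. The following sketch illustrates that idea with `sqlite3` standing in for PostgreSQL, with the table names flattened to `discovery_answer_in_progress` and `discovery_answer`. The columns and the `save_partial`/`finalize` helpers are hypothetical. PostgreSQL supports the same `INSERT ... ON CONFLICT ... DO UPDATE` upsert form, although psycopg uses `%s` placeholders instead of `?`.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE discovery_answer_in_progress (
        contact_id   TEXT NOT NULL,
        question_id  TEXT NOT NULL,
        partial_text TEXT NOT NULL,
        PRIMARY KEY (contact_id, question_id)
    );
    CREATE TABLE discovery_answer (
        id          TEXT PRIMARY KEY,
        contact_id  TEXT NOT NULL,
        question_id TEXT NOT NULL,
        response    TEXT NOT NULL
    );
    """
)


def save_partial(conn: sqlite3.Connection, contact_id: str, question_id: str, text: str) -> None:
    """Append a fragment to the in-progress answer, creating the row if needed."""
    with conn:
        conn.execute(
            """
            INSERT INTO discovery_answer_in_progress (contact_id, question_id, partial_text)
            VALUES (?, ?, ?)
            ON CONFLICT (contact_id, question_id)
            DO UPDATE SET partial_text = partial_text || ' ' || excluded.partial_text
            """,
            (contact_id, question_id, text),
        )


def finalize(conn: sqlite3.Connection, contact_id: str, question_id: str) -> bool:
    """Move a completed answer into discovery_answer.

    The insert and delete commit (or roll back) together via the connection's
    context manager. Returns False if there is no in-progress answer.
    """
    with conn:
        row = conn.execute(
            "SELECT partial_text FROM discovery_answer_in_progress"
            " WHERE contact_id = ? AND question_id = ?",
            (contact_id, question_id),
        ).fetchone()
        if row is None:
            return False
        conn.execute(
            "INSERT INTO discovery_answer (id, contact_id, question_id, response)"
            " VALUES (?, ?, ?, ?)",
            (str(uuid.uuid4()), contact_id, question_id, row[0]),
        )
        conn.execute(
            "DELETE FROM discovery_answer_in_progress"
            " WHERE contact_id = ? AND question_id = ?",
            (contact_id, question_id),
        )
    return True


save_partial(conn, "contact-1", "q-tools", "We use Salesforce")
save_partial(conn, "contact-1", "q-tools", "and Excel exports.")
finalize(conn, "contact-1", "q-tools")
print(conn.execute("SELECT response FROM discovery_answer").fetchall())
```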

Integration with RASA and PostgreSQL

The chatbot will be built using the RASA framework, with custom actions that read from and write to the PostgreSQL database. The process involves:

- Storing Questions in PostgreSQL: Instead of being defined statically in a YAML file, questions are stored in the PostgreSQL database. This allows questions to be generated and retrieved dynamically based on the user's industry, business unit, and role.

- Custom Actions in RASA: Custom actions in RASA will query the PostgreSQL database to fetch relevant questions and save user responses.

- Data Storage: User responses will be stored in the client.discovery table, allowing for structured data collection and easy retrieval for analysis.

- Data Analysis: Post-interview, the collected data will be analyzed to generate insights. This may involve running SQL queries directly on the PostgreSQL database or using external analysis tools connected to the database.
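On the database side, a custom action mostly comes down to two queries. One finds the next question a contact has not yet answered, and the other saves the answer. The following sketch shows that logic with `sqlite3` standing in for PostgreSQL. `model.question` and `client.discovery` are flattened to `model_question` and `client_discovery`, and their columns are simplified and hypothetical. In an actual RASA project, functions like these would be called from the `run` method of a custom action class built on the `rasa_sdk` package. That wiring is omitted so the example runs without RASA installed.

```python
import sqlite3
import uuid
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE model_question (
        id            TEXT PRIMARY KEY,
        sort_order    INTEGER NOT NULL,
        question_text TEXT NOT NULL
    );
    CREATE TABLE client_discovery (
        id          TEXT PRIMARY KEY,
        contact_id  TEXT NOT NULL,
        question_id TEXT NOT NULL REFERENCES model_question(id),
        response    TEXT NOT NULL,
        UNIQUE (contact_id, question_id)  -- one answer per contact per question
    );
    INSERT INTO model_question VALUES
        ('q-sources', 1, 'What are the primary sources of data you use for your daily tasks?'),
        ('q-frequency', 2, 'How frequently do you access sales data?');
    """
)


def next_question(conn: sqlite3.Connection, contact_id: str) -> Optional[tuple[str, str]]:
    """Return (id, text) of the first question this contact has not answered, or None."""
    return conn.execute(
        """
        SELECT q.id, q.question_text
        FROM model_question AS q
        LEFT JOIN client_discovery AS d
          ON d.question_id = q.id AND d.contact_id = ?
        WHERE d.id IS NULL
        ORDER BY q.sort_order
        LIMIT 1
        """,
        (contact_id,),
    ).fetchone()


def save_response(conn: sqlite3.Connection, contact_id: str, question_id: str, response: str) -> None:
    """Store one answer; the UNIQUE constraint rejects a second answer to the same question."""
    with conn:
        conn.execute(
            "INSERT INTO client_discovery (id, contact_id, question_id, response) VALUES (?, ?, ?, ?)",
            (str(uuid.uuid4()), contact_id, question_id, response),
        )


first = next_question(conn, "contact-1")
save_response(conn, "contact-1", first[0], "CRM and weekly sales reports")
second = next_question(conn, "contact-1")
save_response(conn, "contact-1", second[0], "Daily")
print(first, second, next_question(conn, "contact-1"))
```

Deriving the next question from what is already stored, rather than from in-memory state, means an interrupted conversation can pick up where it left off.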

Conclusion

Designing a robust database is crucial for supporting a chatbot-based data discovery interview app. By organizing data into logical schemas and tables, ensuring data integrity with proper constraints, and using UUIDs for global uniqueness, the database can efficiently handle the complex workflow of generating model questions, collecting client data, and storing responses for analysis. This setup streamlines the data discovery process and helps produce accurate, actionable insights for the business.

As always, thanks for stopping by. Let me know what you think.