Designing a Data Source Discovery App - Part 14: Generating Smart Test Data with LLMs

by DL Keeshin

March 17, 2025

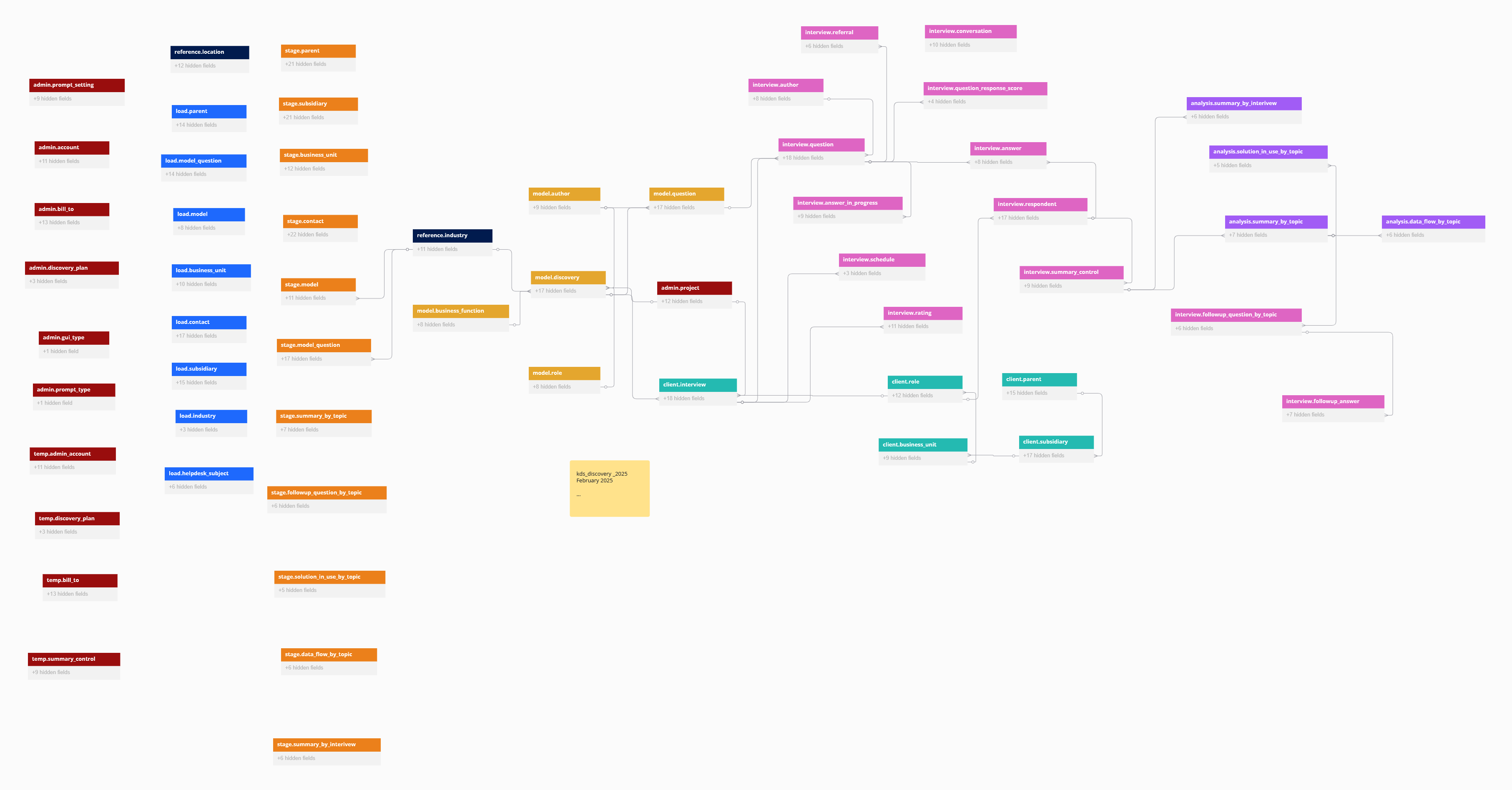

In my last couple of posts, I covered the kDS Discovery app's design strategy for summarizing interview data. We looked at how structured responses and automated summaries help make sense of interviews. Now, let's switch gears and talk about using large language models to generate context-aware test data.

Instead of relying on static datasets or spending time writing out sample responses by hand, we use a large language model to create realistic, context-aware test data on the fly. This makes testing faster and more accurate while reducing manual effort.

Why LLMs Are a Game-Changer for Test Data Generation

Creating realistic test data is a challenge, especially for dynamic apps like kDS Discovery. Traditional methods rely on static datasets or manually crafted responses, which quickly become outdated. Large language models (LLMs) like GPT-4 offer a smarter solution, generating diverse, context-aware test data on demand.

Automating Test Data with Python and GPT-4

The Python script generate_test_answer.py uses GPT-4 to create realistic test answers for interview questions stored in the kDS Discovery test database. This streamlines testing and helps keep each response grounded in a real-world scenario, based on the industry description and user role attached to the question.

How It Works

- Fetch Questions from the Database – The script queries the temp.vw_lookup_for_answer_test view to retrieve a set of test questions.

- Generate a Context-Aware Prompt – Each question is paired with an industry description and user role to craft a detailed prompt for GPT-4.

- Request a Response from GPT-4 – The LLM generates a structured JSON response containing the question ID and a sample answer.

- Validate and Store the Data – The script validates the generated response, checking that the question UUID it returns matches the one that was sent, before inserting the test data into the database via a stored procedure.
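The validation step can be sketched in a few lines of Python. This is a minimal sketch, not the code in generate_test_answer.py: it assumes the model replies with a JSON object using the hypothetical keys `question_id` and `sample_answer`, and the names `validate_answer` and `AnswerValidationError` are illustrative.

```python
import json
import uuid


class AnswerValidationError(ValueError):
    """Raised when a generated answer does not have the expected shape."""


def validate_answer(raw_response: str, expected_question_id: str) -> dict:
    """Parse the model's JSON reply and check it against the question that was sent.

    Assumes (hypothetically) a reply like {"question_id": "<uuid>", "sample_answer": "..."}.
    """
    try:
        payload = json.loads(raw_response)
    except json.JSONDecodeError as exc:
        raise AnswerValidationError(f"response is not valid JSON: {exc}") from exc

    if not isinstance(payload, dict):
        raise AnswerValidationError("response must be a JSON object")

    missing = {"question_id", "sample_answer"} - set(payload)
    if missing:
        raise AnswerValidationError(f"missing keys: {sorted(missing)}")

    # Compare parsed UUIDs so case or formatting differences do not matter.
    try:
        returned_id = uuid.UUID(str(payload["question_id"]))
    except ValueError as exc:
        raise AnswerValidationError("question_id is not a valid UUID") from exc
    if returned_id != uuid.UUID(expected_question_id):
        raise AnswerValidationError("question_id does not match the question sent")

    if not str(payload["sample_answer"]).strip():
        raise AnswerValidationError("sample_answer is empty")

    return payload
```

Because a failed check raises an exception, the caller can skip or retry that question instead of inserting a mismatched row.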

A Faster, Better, Cheaper Approach

- Faster – The script automates the entire process, drastically reducing the time needed to generate and validate test data compared to manual methods.

- Better – Unlike static datasets, GPT-4 responses adapt to the context of each question, making the test data more relevant and closer to real-world use cases.

- Cheaper – Automating test data generation reduces the need to hire additional staff to create and validate test cases by hand. It also cuts the cost of maintaining and updating static datasets, since GPT-4 can generate fresh responses on demand. And it is likely cheaper than a third-party testing tool, which often carries licensing fees and integration costs.

- Error Handling & Validation – The script ensures that each generated response is correctly formatted and adheres to the expected database structure.
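One common way to handle an occasional malformed response is to retry the request a few times before giving up. The sketch below is hypothetical: `generate_with_retries` and its `max_attempts` default are illustrative, not taken from the original script. `generate` stands in for a single GPT-4 request and `validate` for whatever check the script applies, raising `ValueError` on bad output.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def generate_with_retries(
    generate: Callable[[], str],
    validate: Callable[[str], T],
    max_attempts: int = 3,
) -> T:
    """Call `generate` until `validate` accepts its output or attempts run out.

    `validate` should return the parsed result or raise ValueError
    (json.JSONDecodeError is a subclass of ValueError).
    """
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        raw = generate()  # e.g. one GPT-4 request for a single question
        try:
            return validate(raw)
        except ValueError as exc:
            last_error = exc  # remember the failure and try again
    raise RuntimeError(
        f"no valid response after {max_attempts} attempts"
    ) from last_error
```

Keeping `max_attempts` small bounds the extra API cost when a prompt consistently produces bad output.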

Code Breakdown

Fetching Data from PostgreSQL

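```python
# Fetch a batch of 20 test questions, plus the respondent and industry
# context each prompt needs, from the lookup view in the temp schema.
query = """
    SELECT email_, role_, respondent_id, industry_description,
           subsidiary_, business_unit, question_text, question_id
    FROM temp.vw_lookup_for_answer_test
    ORDER BY sort_order
    LIMIT 20;
"""
```

This query retrieves the first 20 questions in sort order, a sample intended to cover a variety of topics.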

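The query returns plain tuples in column order. A small named record, sketched here as a hypothetical `QuestionRow` type with an illustrative `to_question_rows` helper, can make the downstream prompt and insert code easier to read. The field names mirror the selected columns; the type hints assume the driver returns the IDs as strings, and the sample row is made up.

```python
from typing import Iterable, NamedTuple


class QuestionRow(NamedTuple):
    """One row from the lookup view, in the same order as the SELECT list."""
    email_: str
    role_: str
    respondent_id: str
    industry_description: str
    subsidiary_: str
    business_unit: str
    question_text: str
    question_id: str


def to_question_rows(rows: Iterable[tuple]) -> list[QuestionRow]:
    """Convert raw cursor tuples (e.g. from cur.fetchall()) into named records."""
    return [QuestionRow(*row) for row in rows]


# Made-up sample row, for illustration only.
rows = [(
    "pat@example.com", "Data Engineer", "r-001", "Regional grocery chain",
    "Example Foods", "Retail", "Where is your sales data stored?", "q-001",
)]
for record in to_question_rows(rows):
    print(record.role_, "->", record.question_text)
```

With psycopg2, a similar effect is available without a custom type by passing `cursor_factory=psycopg2.extras.NamedTupleCursor` when creating the cursor.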
Prompting GPT-4 for Answers

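```python
# Build the GPT-4 prompt from the question's industry description and role
# (the rest of the prompt text is elided here).
prompt = (
    f"Based on the following industry_description and role, please generate a sample answer..."
)
```

The prompt is built to give GPT-4 the industry context, the respondent's role, and the expected response format.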
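Since the full prompt text is elided above, here is a hypothetical version for illustration only. The wording, the `build_messages` helper, and the JSON shape it asks for are assumptions, not the script's actual prompt. It packages the industry description, role, question ID, and question text into chat-style messages.

```python
def build_messages(industry_description: str, role: str,
                   question_id: str, question_text: str) -> list[dict]:
    """Build chat messages asking for one sample answer (hypothetical wording)."""
    system = (
        "You write realistic sample answers to data-discovery interview questions "
        "for testing purposes. Reply with one JSON object and nothing else."
    )
    user = (
        f"Industry description: {industry_description}\n"
        f"Respondent role: {role}\n"
        f"Question ID: {question_id}\n"
        f"Question: {question_text}\n\n"
        # Plain (non-f) strings, so the JSON braces below are literal.
        'Respond with JSON of the form {"question_id": "<the question ID above>", '
        '"sample_answer": "<a plausible answer from this respondent>"}.'
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_messages(
    "Regional grocery chain", "Data Engineer",
    "q-001", "Where is your sales data stored?",
)
print(messages[1]["content"])
```

With the openai Python package (v1.x), these messages could be sent with `client.chat.completions.create(model="gpt-4", messages=messages)`, and the reply text read from `response.choices[0].message.content`. Asking the model to echo the question ID back is what makes a UUID match check possible.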

Storing the Generated Test Data

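```python
import json

# Insert one generated answer via the interview.up_insert_answer stored
# procedure; the driver fills the %s placeholders with the bound values.
cur.execute(
    "CALL interview.up_insert_answer(%s, %s, %s, %s, %s);",
    (question_id, respondent_id, json.dumps(sample_answer), answer_date, source),
)
```

This step inserts each generated answer into the database, making it available for testing.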

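If you want a whole batch of inserts to succeed or fail together, one option is to run them in a single transaction. This sketch assumes a psycopg2 connection; `build_answer_params`, `store_answers`, the default `source` label, and the use of today's date are hypothetical choices, while the stored procedure call matches the one above.

```python
import datetime
import json


def build_answer_params(question_id, respondent_id, sample_answer,
                        source="gpt-4", answer_date=None):
    """Return arguments for CALL interview.up_insert_answer(...) in call order.

    The default source label and today's date are hypothetical choices.
    """
    if answer_date is None:
        answer_date = datetime.date.today()
    return (question_id, respondent_id, json.dumps(sample_answer),
            answer_date, source)


def store_answers(conn, param_rows):
    """Insert every answer in one transaction on a psycopg2 connection.

    `with conn:` commits when the block finishes and rolls back if any
    statement raises; it does not close the connection.
    """
    with conn:
        with conn.cursor() as cur:
            for params in param_rows:
                cur.execute(
                    "CALL interview.up_insert_answer(%s, %s, %s, %s, %s);",
                    params,
                )
```

Committing once per batch means a failure partway through leaves no half-inserted batch behind; committing per row would instead keep earlier answers when a later one fails.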
Final Thoughts

Using GPT-4 to generate test data simplifies test creation and makes test cases more realistic in applications like kDS Discovery. Automating the process helps keep the test data relevant, easy to scale, and reflective of real-world scenarios.

As LLMs continue to improve at generating structured, meaningful responses, they are likely to become an invaluable tool for developers looking to streamline testing and data generation workflows.

Also, as mentioned previously, my company, kDS LLC, is actively seeking organizations interested in acquiring a beta version of the kDS Data Source Discovery App. If your organization is interested, please let us know using the contact form below. We'd love to collaborate.

As always, thanks for stopping by!