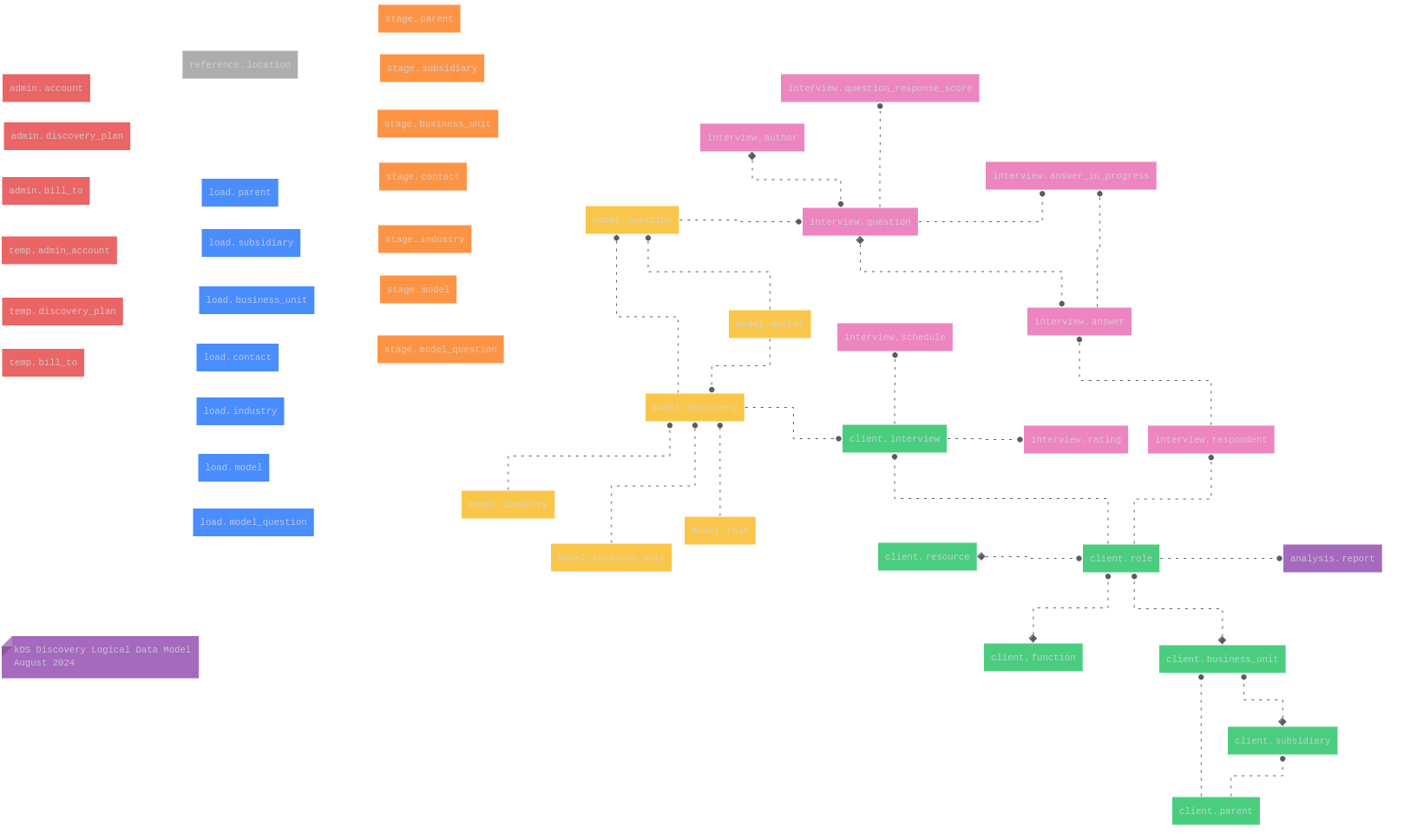

In my previous blog post, I laid out the logical data design for the Chatbot interview data discovery app. Since then, I have made several important changes and have continued building out the design. Here is a summary of what was done:

- Two more schemas were added:

- load (blue in the above diagram)

- stage (orange in the above diagram)

- The schema “discovery” was renamed to “interview.” While the entire process is data “discovery,” these tables store interview respondents and answers. Thus, “interview” more accurately reflects their purpose.

So, right now, the design contains the following schemas:

| Schema | Color | Purpose |

|---|---|---|

| admin | red | administrative data. |

| analysis | purple | storing analysis results. |

| client | green | client-related data. |

| interview | rose | interview respondents and answer data. |

| load | blue | initial data loads from external sources. |

| model | yellow | model questions and related metadata. |

| reference | grey | reference data like “location.” |

| stage | orange | processed data from the initial load schema. |

You can now see the preliminary “physical” database structure on GitHub.

Keep in Mind

-

The “load” schema is intended to load external client or model data generated from an external source, like client data initially gathered from a web front end or model data generated from a large language model (LLM) like ChatGPT.

- Columns in the tables defined in this “load” schema are primarily character data types, except for a few system columns like “create date,” which are loaded automatically with default values. Using all character data simplifies the loading process by reducing or mostly eliminating errors. If the length of the columns is large enough, data should get loaded quickly. There are no primary or foreign keys defined in this schema. The idea here is to easily “just get the data in.”

- Besides simplicity, this design is scalable. This data could potentially be stored outside of a relational database model. A NoSQL database like AWS DynamoDB, Azure Cosmos DB, or Google BigQuery could work. These solutions would be a consideration and likely a requirement if the amount of data that needed loading was large—probably in the thousands per second range.

-

In the “stage” schema, data is assigned specific data types like date or date-time, numeric values, etc. Any errors can be written to stage error tables. It is also a good place to generate any natural keys that reflect the keys that are part of the next step in data transformation.

Natural Keys vs. Surrogate Keys

This raises the age-old question of whether to use natural or surrogate keys. Why not use both? Early in the data transformation, it makes sense to use a natural key. Here’s why:

Natural Keys

Definition: A natural key is a key that is derived from the data itself, typically a field or a combination of fields that naturally and uniquely identify a record in a table. Examples of natural keys include Social Security Numbers (SSNs), email addresses, or VINs (Vehicle Identification Numbers).

- Directly related to the business domain.

- Often carry meaningful information about the entity they identify.

- Can sometimes change if the underlying business data changes (e.g., a person changes their email address).

- Generally recognized and used across different systems.

Surrogate Keys

Definition: A surrogate key is an artificially created key that serves as a unique identifier for each record in a table, independent of the data in the record. Surrogate keys are usually sequential integers or UUIDs (Universally Unique Identifiers).

- Not derived from business data, hence no inherent meaning.

- Remains stable and unchanged even if the business data changes.

- Simplifies join operations in a relational database.

- Often used as primary keys in data warehouses and databases because they are immutable and efficient.

Why Use Natural Keys During Staging and Assign Surrogate Keys Later?

Natural Keys in Staging

- Data Integrity and Validation: Natural keys help ensure that the data being staged is consistent with the source systems. Since they are directly tied to the business domain, they make it easier to validate the integrity and uniqueness of records as they are ingested. Natural keys make it easier to detect duplicate records or inconsistencies in the data, which is crucial during the early stages of data processing.

- Traceability: Using natural keys in the staging phase allows for easy traceability back to the source systems. This traceability is vital for debugging and resolving issues during the ETL (Extract, Transform, Load) process. It ensures that each record in the staging area can be mapped back to its origin in the source systems.

- Flexibility in Data Integration: During the staging phase, data from multiple source systems often needs to be integrated. Natural keys provide a common reference point for merging data from different systems. This flexibility is especially valuable when integrating data from disparate sources that may not have a common surrogate key structure.

Surrogate Keys in Final Tables

- Performance Optimization: Surrogate keys, being simpler and often numeric, are more efficient for indexing and joining tables. They optimize query performance, particularly in large datasets where joins on complex natural keys can be slow.

- Immutable Identifiers: Surrogate keys provide a stable and unchanging identifier for each record, which is critical in scenarios where records need to be referenced over time, such as in data warehousing. This stability ensures that historical data remains accurate and consistent.

- Reduction of Data Redundancy: Surrogate keys help reduce data redundancy by creating a single, unique identifier for each record. This reduction in redundancy is particularly important when dealing with complex relationships in normalized database schemas.

This post outlines the ongoing development of a database designed to support a Chatbot interview data discovery app, highlighting key design changes such as the introduction of new schemas and renaming existing ones for better clarity. The strategic use of natural keys during the staging phase leverages business-related identifiers for validation, traceability, and integration. Subsequently, surrogate keys are assigned in the final tables to optimize performance, ensure data stability, and maintain flexibility. This hybrid approach balances data integrity with system performance, ensuring that the database design meets both functional and technical requirements while supporting the app's functionality in a scalable and efficient manner.

As always, thanks for stopping by.