Designing a Database to Support a Chatbot Interview App – Part 3: Modeling Data

by DL Keeshin

September 19 2024

In my recent blog series, I’ve been exploring the design of a relational database to manage data for a chatbot data discovery interview app. So far, I’ve taken a traditional relational database design approach.

Relational database modeling provides a structured foundation by organizing data into tables, ensuring data integrity, and supporting efficient querying. This structure is crucial for managing and storing the information that the chatbot collects. However, when it comes to generating meaningful questions that help discover important data sources and processes, LLM-based modeling plays an essential role.

While relational databases offer a rigid, schema-based framework, modeling data with a large language model (LLM) establishes a starting point for generating insightful, context-driven questions. These questions allow the app to identify key data sources and business processes, adapting to different industries or use cases. By simulating human-like conversations, LLMs enhance the chatbot’s ability to conduct data discovery interviews, complementing the structured data management of relational models.

Generating Model Data

To get the data modeling process rolling, I decided to focus on one area that I have experience in—wholesale distribution.

I began by prompting the LLM with general questions to establish a broad context for the dataset, gradually moving toward more granular details. This method allowed the LLM to first capture the high-level structure of the industry before diving into more specific data points.

Once the LLM generated relevant data, I reviewed it for accuracy and relevance. I then provided the LLM with a predefined SQL table structure and asked it to generate SQL insert statements, enabling me to load the structured data directly into the relational model. Using both ChatGPT and Gemini, here’s how the process unfolded.

Generating Parent Company and Business Units

First, I wanted some context, so I prompted the LLM with:

Next, I asked:

Generate a PSQL (PostgreSQL) insert statement for a model parent (company) using the following table structure "CREATE TABLE load.parent (name_ varchar(96) NOT NULL, organization_type varchar(32) NULL, ticker_ varchar(8) NULL, nasic_code varchar(8) NULL, product_service varchar(128) NULL, annual_revenue numeric(15, 2) NULL, employee_total int NULL, website_ varchar(92) NULL, location_ varchar(48) NULL, source_ varchar(48) NOT NULL, create_date date NOT NULL, created_by varchar(92) NOT NULL, modified_date date NULL, modified_by varchar(92) NULL);"

It produced the following SQL statement:

INSERT INTO load.parent

(

name_, organization_type, ticker_, nasic_code, product_service, annual_revenue,

employee_total, website_, location_, source_, create_date, created_by

)

VALUES

(

'Global Distributors Inc.', 'Wholesale Distributor', 'GDI', '561910',

'Packaging and Labeling Services', 15000000.50, 120, 'www.globaldistributors.com',

'New York, NY', 'Data Source 1', CURRENT_DATE, 'system_admin'

);

Next, I asked;

"What might business units look like for this distributor with a NASIC code of 561910?"

Followed by,

Generate SQL insert statements for these business units using the following table structure "CREATE TABLE load.business_unit ( name_ varchar(96) NOT NULL, parent_ varchar(96) NOT NULL, subsidiary_ varchar(96) NULL, nasic_code varchar(8) NOT NULL, model_reference varchar(255) NOT NULL, create_date date NOT NULL, created_by varchar(92) NOT NULL, modified_date date NULL, modified_by varchar(72) NULL, source_ varchar(72) NOT NULL);"

It returned the following SQL statement:

INSERT INTO load.business_unit

(

name_,

parent_,

subsidiary_,

nasic_code,

model_reference,

create_date,

created_by,

source_

)

VALUES

(

'Sales and Marketing Unit',

'Global Distributors Inc.',

NULL,

'561910',

'',

CURRENT_DATE,

'system_admin',

'Data Source 1'

);

Generating Contacts and Job Titles

I asked,

Then requested,

Generate SQL insert statements for these contacts and job titles using the following table structure, "CREATE TABLE load.contact ( email_ varchar(128) NOT NULL, parent_ varchar(96) NOT NULL, subsidiary_ varchar(96) NULL, business_unit varchar(96) NOT NULL, nasic_code varchar(8) NOT NULL, title_ varchar(64) NULL, role_ varchar(50) NOT NULL, respondent_ varchar(12) NOT NULL, project_sponsor varchar(12) NOT NULL, first_name varchar(32) NULL, last_name varchar(92) NOT NULL, location_ varchar(48) NULL, phone_ varchar(16) NULL, create_date date NOT NULL, created_by varchar(92) NOT NULL, modified_date date NULL, modified_by varchar(92) NULL);"

Out came,

INSERT INTO load.contact

(

email_,

parent_,

subsidiary_,

business_unit,

nasic_code,

title_,

role_,

respondent_,

project_sponsor,

first_name,

last_name,

location_,

phone_,

create_date,

created_by,

modified_date,

modified_by

)

VALUES

(

'sales.manager@globaldistributors.com',

'Global Distributors Inc.',

NULL,

'Sales and Marketing Unit',

'561910',

'Sales Manager',

'Manager',

'Yes',

'No',

'Michael',

'Johnson',

'Los Angeles, CA',

'555-456-7890',

CURRENT_DATE,

'system_admin',

NULL,

NULL

);

You should have an idea how this works now.

Identifying Business Keys

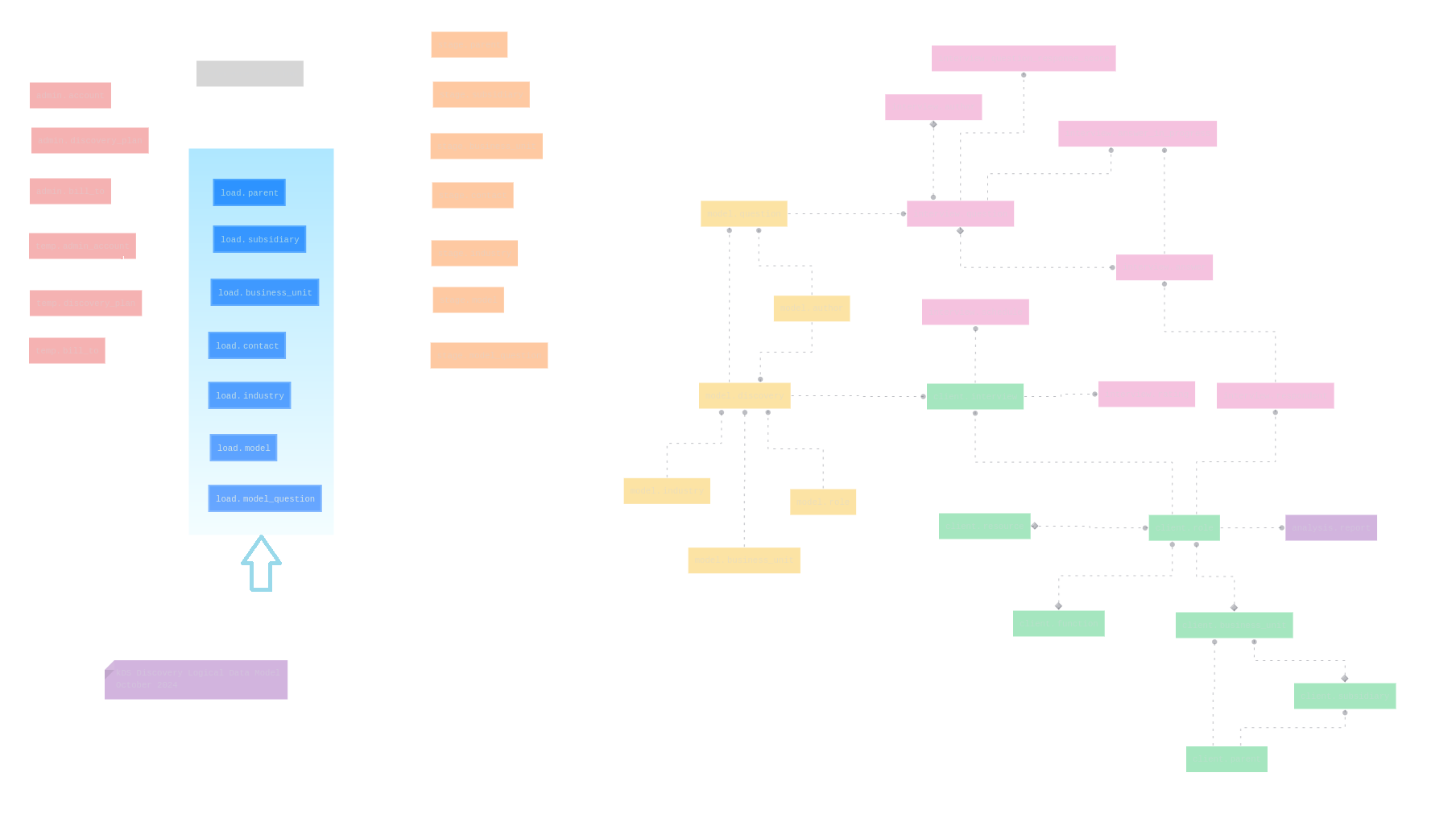

Identifying business keys is crucial in relational database design, as they ensure data integrity and help efficiently retrieve and link related records across tables. Looking at the database ERD diagram, I saw that the job role, business unit, and industry code would be a good "candidate" business key for managing the model-questions. Needing to generate the keys,I prompted.

Using the following load.model and load.contact tables, generate an INSERT statement to populate nasic_code, biz_unit, role_ columns in the load.model table, with DISTINCT nasic_code, business_unit, and role_ combinations from the load.contact table. Use the value 'system_admin' for created_by and 'model_01' for source_ columns. Here is the load.model table, "CREATE TABLE load.model ( nasic_code varchar(8) NOT NULL, biz_unit varchar(96) NOT NULL, role_ varchar(96) NOT NULL, create_date date NULL, created_by varchar(92) NOT NULL, modified_date date NULL, modified_by varchar(72) NULL, source_ varchar(72) NOT NULL );" Here is the load.contact table, "CREATE TABLE load.contact ( email_ varchar(128) NOT NULL, parent_ varchar(96) NOT NULL, subsidiary_ varchar(96) NULL, business_unit varchar(96) NOT NULL, nasic_code varchar(8) NOT NULL, title_ varchar(64) NULL, role_ varchar(50) NOT NULL, respondent_ varchar(12) NOT NULL, project_sponsor varchar(12) NOT NULL, first_name varchar(32) NULL, last_name varchar(92) NOT NULL, location_ varchar(48) NULL, phone_ varchar(16) NULL, create_date date NOT NULL, created_by varchar(92) NOT NULL, modified_date date NULL, modified_by varchar(92) NULL );"

It generated,

INSERT INTO load.model (nasic_code, biz_unit, role_, create_date, created_by, source_)

SELECT DISTINCT nasic_code, business_unit, role_, CURRENT_DATE, 'system_admin', 'model_01'

FROM load.contact;

Interview Questions

Here's it where it gets really interesting. I prompted;

Once the questions returned, I followed with

Generate SQL insert statements for each question, using the following table structure, "CREATE TABLE load.model_question ( nasic_code varchar(8) NOT NULL, business_unit varchar(96) NOT NULL, role_ varchar(96) NOT NULL, model_topic varchar(96) NOT NULL, topic_ varchar(96) NOT NULL, subtopic_ varchar(96) NULL, syntax_ varchar(1024) NOT NULL, help_ varchar(1024) NULL, type_ varchar(32) NOT NULL, sort_order smallint NOT NULL, location_reference varchar(96) NULL, create_date date NOT NULL, modified_date date NULL, source_ varchar(96) NULL );"

It produced twenty insert statement with questions. Like this

INSERT INTO load.model_question

(

nasic_code, business_unit, role_, model_topic, topic_, syntax_, help_,

type_, sort_order, create_date, source_

)

VALUES

(

'561910', 'Sales and Marketing Unit', 'Manager', 'Primary Data Sources',

'Data Sources', 'What are the primary data sources you rely on to perform your job in the Sales and Marketing Unit?',

'Ask the manager to identify key data sources.', 'Open-ended', 1, CURRENT_DATE, 'InterviewScript'

);

Final Thoughts

In this post, I’ve explored how traditional relational database design can be integrated with the dynamic, context-driven modeling capabilities of large language models (LLMs). By combining the structure and integrity of relational databases with the flexibility of LLM-generated insights, we can build a more adaptive and efficient chatbot for conducting data discovery interviews.

This hybrid approach allows the chatbot to store and manage data reliably and at scale, while dynamically adjusting to the nuances of different industries and organizational structures. As I continue refining this system, the focus will be on optimizing LLM prompts and scaling the chatbot to support a wider range of business use cases.

Next Steps

The immediate next step is to implement a staging process to validate the generated data, ensuring that appropriate data types are assigned and business keys are defined before inserting the data into the database. This will enhance data integrity and accuracy, improving the overall reliability of the system.

If you want to try creating and loading the kds_discovery database with all of the data described here, see "Database Installation" in the README file here.

Looking ahead, automating the entire process—including data generation, validation, and insertion—using an LLM API and Python will be a future goal.

As always, thank you for stopping by.