Designing a Database to Support a Chatbot Interview App – Part 4: Staging the Data Discovery Model

by DL Keeshin

October 2, 2024

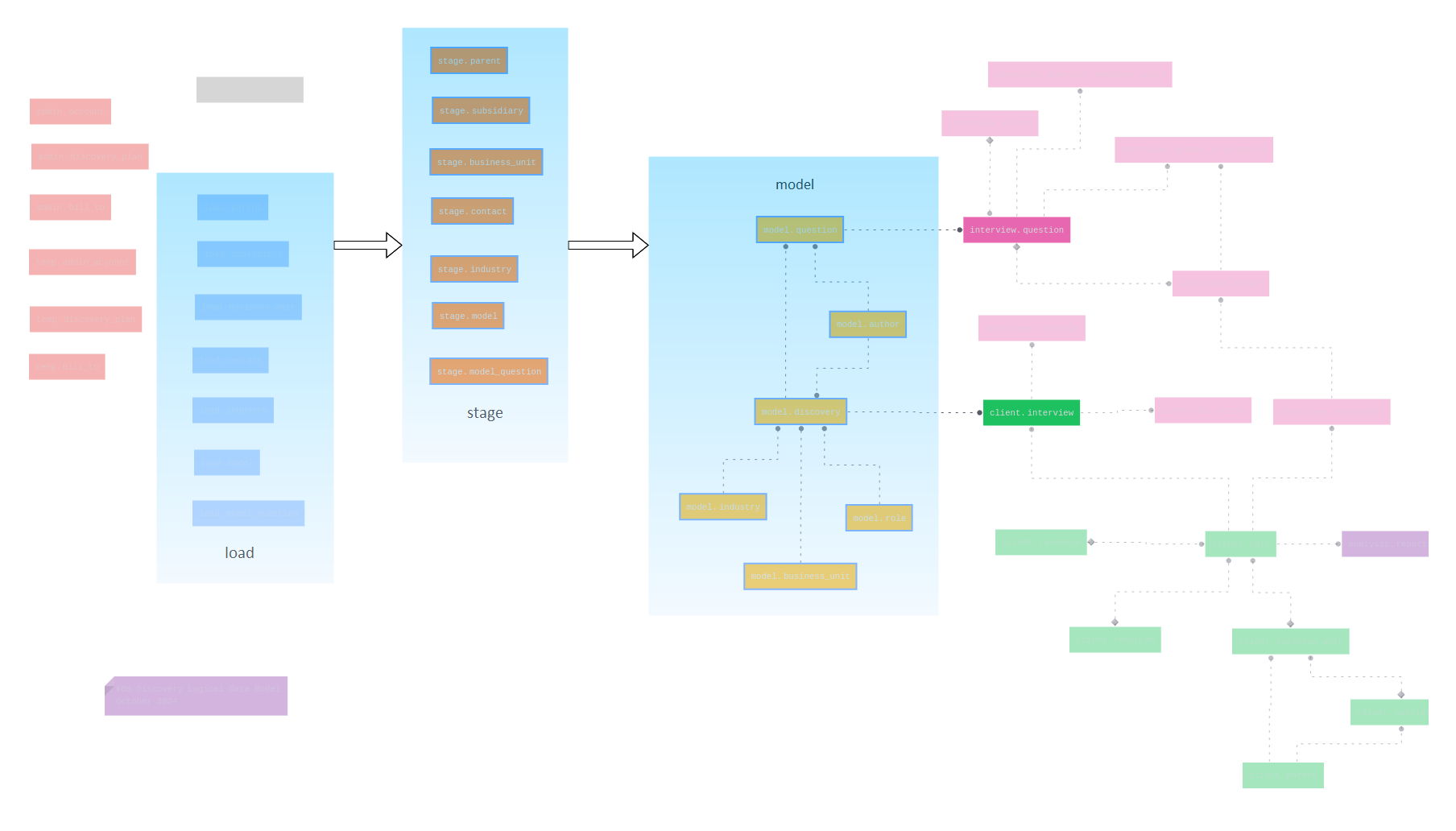

In my last post, I demonstrated how I used generative AI to create data discovery interview questions and explained how I loaded the questions into the load schema tables, which are shown in blue in the above diagram.

Staging for Assigning Data Types and Managing Keys

Today, I'll discuss the process of staging data. So far, I’ve primarily used character data types for the columns in the load schema tables. This approach simplifies data loading and reduces errors. The staging process is where we assign database-specific data types, validate data, and define natural keys to make the data easier to work with in the future.

Adding Columns and Preparing the Staging Area

As part of the staging process, it’s useful to store additional metadata, such as the source of the data. This helps track the origin of the records and understand the context in which they were created. I missed adding the source_ column to a few tables in the original design. Here's how I added it using a psql script:

-- Adding source_ column to multiple tables

ALTER TABLE load.industry ADD COLUMN source_ varchar(96) NULL;

ALTER TABLE stage.industry ADD COLUMN source_ varchar(96) NOT NULL;

ALTER TABLE stage.contact ADD COLUMN source_ VARCHAR(96) NOT NULL;

ALTER TABLE stage.model ADD COLUMN name_ VARCHAR(64) NOT NULL;

ALTER TABLE model.role ADD COLUMN source_ VARCHAR(96) NOT NULL;

ALTER TABLE model.discovery ADD COLUMN source_ VARCHAR(96) NOT NULL;

I added a source_ column to both the load and stage tables. This column captures the origin of the data, which is especially useful when merging data from different sources. In the stage schema, the source_ column is set to NOT NULL to ensure that the source is always specified.

Migrating Data from Load to Stage

Once the tables are prepared, the next step is to move the data from the load schema into the stage schema. This is where we start transforming the raw character data and adding metadata for tracking purposes.

A Word About Industry Codes

In the U.S., the NAICS (pronounced “nakes”) stands for the North American Industry Classification System. These codes classify businesses based on their primary activities. For this project, I use the six-digit NAICS code, which provides the most granular detail about specific industries compared to the broader two- or four-digit codes in the system.

Note that NAICS is not the only industry classification system. There are others.

Updating Model Assignments

In the stage schema, I need to update model-specific metadata and create unique model names by business unit. Here’s how I do it:

-- Assigning source and name to models by business unit

UPDATE stage.model SET source_ = 'kds_discovery hybrid LLM', name_ = 'model_01' WHERE biz_unit = 'Sales and Marketing Unit';

UPDATE stage.model SET source_ = 'kds_discovery hybrid LLM', name_ = 'model_02' WHERE biz_unit = 'Customer Support and Service Unit';

I’m assigning model names like model_01 and model_02 for different business units. This simplifies the example, but hardcoded values like this aren't scalable. In practice, dynamic naming based on business logic would be more efficient and maintainable.

Inserting Questions with JSON Syntax

Some fields require more complex formatting. For example, I wanted to cast fields like syntax_ and help_ as JSON for easier manipulation.

-- Casting question syntax as JSON and inserting into stage.model_question

INSERT INTO stage.model_question (nasic_code, business_unit, role_, model_topic, topic_, subtopic_, syntax_, help_, type_, sort_order, location_reference, create_date, modified_date, source_)

SELECT nasic_code, business_unit, role_, model_topic, topic_, subtopic_, ('"' || syntax_ || '"')::jsonb, ('"' || help_ || '"')::jsonb, type_, sort_order, location_reference, create_date, modified_date, 'kds_discovery Hybrid LLM'

FROM load.model_question;

Populating Model Roles and Business Units

After staging the data, I populate the model schema by inserting roles and business units based on the data in stage.

-- Inserting roles for the Sales and Marketing Unit

INSERT INTO model.role (name_, created_by, source_)

SELECT role_, 'sys admin', 'kds_discovery LLM hybrid'

FROM stage.model WHERE biz_unit = 'Sales and Marketing Unit' GROUP BY role_;

Here, I’m inserting roles for the Sales and Marketing Unit into the model.role table, ensuring proper organization and structure for the data discovery process.

Inserting Author Information

-- Inserting author metadata

INSERT INTO model.author (author_id, author_name, email_, start_date, create_date, source_)

VALUES (gen_random_uuid(), 'kds discovery author', 'kds@kds_discovery.com', CURRENT_DATE, CURRENT_DATE, 'kds_discovery');

This step helps me track who created the models and when, adding another layer of transparency and governance to the process.

Final Steps and Wrapping Up

After staging, the data is inserted into the model schema, like this;

-- Final model loading into discovery and question tables

INSERT INTO model.discovery (nasic_code, unit_id, role_id, author_id, title_, model_topic, start_date, source_)

SELECT s.nasic_code, bu.unit_id, r.role_id,

(SELECT author_id FROM model.author WHERE author_name = 'kds discovery author'),

'Model Test', 'Model Topic Test', CURRENT_DATE, 'kds_discovery hybrid LLM'

FROM stage.model s

INNER JOIN model.business_unit bu ON s.biz_unit = bu.name_

INNER JOIN model.role r ON s.role_ = r.name_;

This final step connects the staged data across multiple dimensions—industry, business unit, role, and author—forming the foundation for the data discovery process.

The Staging Process and Next Steps

In this post, I outlined the staging process for transforming raw data by assigning data types, managing keys, and adding metadata like sources and model names. Key steps included migrating data from the load schema, updating model-specific fields, and inserting records from the stage schema tables into the model schema tables. This process prepares the data for long-term use and traceability in the database.

Next time, I'll describe the loading of the client and interview schema tables.

If you want to try loading the kds_discovery database with the data described here, see the updated section under "Database Installation" in the README file here.

As always, thank you for stopping by.