Designing a Data Source Discovery App - Part 12: Summarizing Interview Data

by DL Keeshin

February 28, 2025

Phase One

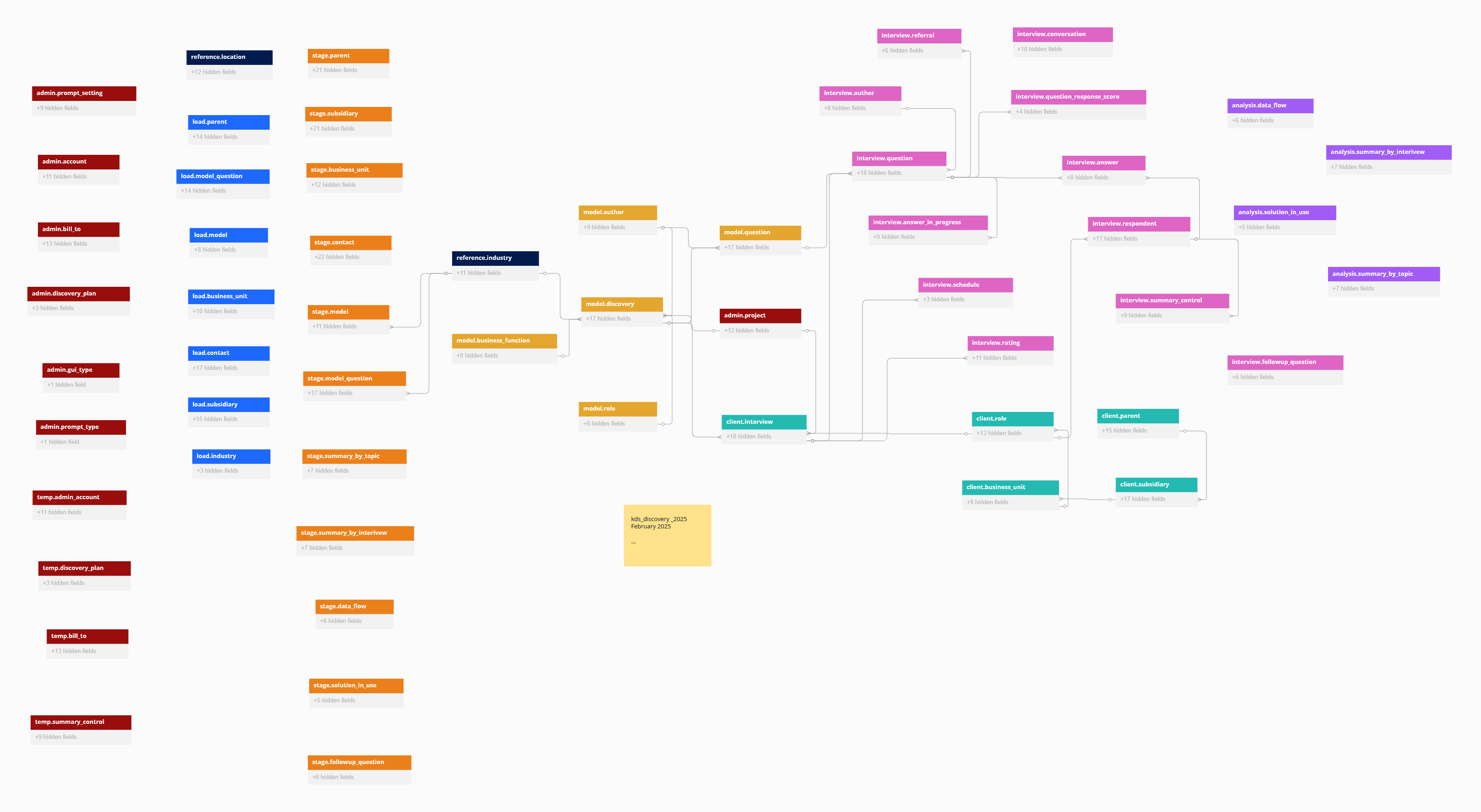

In my last post, I discussed recent kDS Discovery App database changes. Today, I'll discuss the design strategy for the summary process that pulls key insights from interview responses. In phase one of the process, we break things down by respondent and topic data. In phase two, we zoom out to the interview level and suggest some initial recommendations. This post walks through the Python script behind phase one, including the new SQL tables that keep everything organized.

What This Script Does

The generate_first_summary.py Python script helps make sense of interview responses by structuring them based on topic and respondent. It taps into OpenAI’s GPT model. It generates summaries that highlight key points, data flows, system mentions, and even follow-up questions. Once generated, these summaries get stored in the PostgreSQL database for future use.

Key SQL Tables in Play

- temp.summary_control – This is a temporary development table that tracks each summary run, assigns a control ID, and logs details like character count, answer count, and timestamps. Once finalized, it will be replaced by

interview.summary_control.

Table temp.summary_control {

control_id uuid [pk, not null, default: `gen_random_uuid()`]

interview_id uuid [not null]

respondent_id uuid [not null]

start_date timestamptz [not null]

prompt_id UUID

character_count bigint

answer_count smallint

create_date date [not null]

source_ varchar(96) [not null]

}interview.summary_by_topic.

Table stage.summary_by_topic {

by_topic_id uuid [pk, not null, default: `gen_random_uuid()` ]

control_id UUID [not null]

topic_ varchar(96) [not null]

summary_ jsonb [not null]

summary_date timestamptz

create_date date [not null]

source_ varchar(96) [not null]

}How It Works

- Set Up the Database and Generate a Control ID

- Connects to the database using environment variables.

db_params = { "dbname": os.getenv("DBSURVEY"), "user": os.getenv("DBUSER"), "password": os.getenv("DBPASSWORD"), "host": os.getenv("DBHOST"), "port": os.getenv("DBPORT") } - Inserts a record into

temp.summary_controlto log key details. - Fetches the control ID for tracking.

- Grab and Structure the Data

- Gets interview batches sorted by topic and respondent.

def get_interview_batches(connection): return execute_query(connection, """ SELECT row_number() OVER (ORDER BY interview_id, topic_) AS batch_number, interview_id, topic_, respondent_id FROM interview.vw_answer_lookup GROUP BY topic_, interview_id, respondent_id ORDER BY 3; """) - Fetches industry, function, and role details for business context.

- Collects relevant questions and answers.

- Generate AI Summaries

- Builds a structured prompt with interview details.

def create_prompt(business_key, responses, control_id): # Current timestamp current_time = datetime.now().strftime('%Y-%m-%d %H:%M:%S') qa_text = "\n".join([f"Question (Subtopic: {resp['subtopic']}): {resp['question']}\nAnswer: {resp['answer']}\n" for resp in responses]) return f""" Summarize the following interview answers from a {business_key['role_name']} in the {business_key['function_name']} of a {business_key['industry_description']} organization by topic and subtopic. Identify any data flows between source and destinations. Note any system or product names in use. Suggest a relevant follow-up question to gain deeper insights. Include the control_id {control_id} in the output. Respond in the following JSON format: {{ "analysis": [ {{ "control_id": "{control_id}", "summary": "", "summary_date": "{current_time}", "noted_data_flow_source": " ", "noted_data_flow_destination": " ", "solution_in_use": " ", "follow_up_question": " " }} ] }} Here are the interview responses: {qa_text} """ - Calls OpenAI to create a JSON summary that includes key insights, data flow sources and destinations, system mentions, and a follow-up question .

- Store the Summaries

- Saves the structured summary into

stage.summary_by_topic, linked to the control ID.

def insert_summary_by_topic(connection, control_id, topic, summary_json): execute_query(connection, """ INSERT INTO stage.summary_by_topic (control_id, topic_, summary_, summary_date, create_date, source_) VALUES (%s, %s, %s, NOW(), CURRENT_DATE, 'interview summary script'); """, (control_id, topic, json.dumps(summary_json)), fetch=False) - Saves the structured summary into

def generate_summary_control(connection):

execute_query(connection, """

INSERT INTO temp.summary_control (

interview_id, respondent_id, start_date, character_count, answer_count, create_date, source_)

SELECT interview_id, respondent_id, CURRENT_DATE, SUM(LENGTH(answer_::TEXT)),

COUNT(answer_id), CURRENT_DATE, 'kds admin' FROM interview.vw_answer_lookup

GROUP BY interview_id, respondent_id;

""", fetch=False)

result = execute_query(connection, "SELECT control_id FROM temp.summary_control ORDER BY create_date DESC LIMIT 1;")

return result[0][0] if result else None

def get_business_key(connection, topic, interview_id, respondent_id):

result = execute_query(connection, """

SELECT industry_description, function_name, role_name FROM interview.vw_answer_lookup

WHERE topic_ = %s AND interview_id = %s AND respondent_id = %s

GROUP BY industry_description, function_name, role_name;

""", (topic, interview_id, respondent_id))

return {"industry_description": result[0][0], "function_name": result[0][1], "role_name": result[0][2]} if result else None

def get_interview_responses(connection, topic, interview_id, respondent_id):

result = execute_query(connection, """

SELECT sort_order, topic_, subtopic_, syntax_ AS question_, answer_ FROM interview.vw_answer_lookup

WHERE topic_ = %s AND interview_id = %s AND respondent_id = %s ORDER BY 1;

""", (topic, interview_id, respondent_id))

return [{"sort_order": row[0], "topic": row[1], "subtopic": row[2], "question": row[3], "answer": row[4]} for row in result] if result else None

@retry(stop=stop_after_attempt(3), wait=wait_exponential(multiplier=1, min=4, max=10))

def make_openai_call(model="gpt-4-turbo", prompt="", temperature=0.7, max_tokens=2000):

try:

response = openai.ChatCompletion.create(

model=model,

messages=[{"role": "user", "content": prompt}],

temperature=temperature,

max_tokens=max_tokens

)

response_content = response['choices'][0]['message']['content'].strip()

response_content = re.sub(r"```json\n|```", "", response_content).strip()

try:

return json.loads(response_content)

except json.JSONDecodeError:

return {"analysis": [{"summary": response_content}]}

except Exception as e:

print(f"Error calling OpenAI: {e}")

raise

The entire script can be seen on Github.

Use of Inline SQL and JSON

This script is a prototype. It contains a lot of inline SQL, which is common in development, especially when AI-generated code is involved. In later development steps, we will optimize these queries and likely move most into pSQL functions for data retrieval and stored procedures for data modification. This shift will make the code easier to maintain, improve performance, and ensure better separation between logic and database operations. Additionally, the script contains inline JSON which will eventually be stored in a jsonb field in pSQL for better query performance and flexibility.

A Word About JSON

JSON is the preferred file format for kDS Discovery App data integration. The reasons are simple: it is semi-structured, and most popular databases have native support for it. JSON is less prone to errors compared to CSV files, which can be problematic with embedded single and double quotes. We keep files as small as possible and use more frequent transmissions and loads. Staging tables are ideal for parsing important JSON keys and values, and Python excels at this task.

What’s Next?

Phase one sets the stage by summarizing at the respondent level. In phase two, we’ll:

- Roll up summaries at the interview level

- Identify bigger trends

- Generate initial recommendations

By making interview data more structured and searchable, the kDS Discovery App is becoming an even better tool for extracting insights.

Collaborators?

We are actively seeking organizations interested in testing a beta version of the kDS Data Source Discovery App. If you or someone you know is interested, please let us know. We’d love to collaborate. Tell us in the comments below. As always, thanks for stopping by!